One of the most problematic aspects of using the latest Nvidia GeForce RTX 30 series GPUs for mining Ethereum is that their video memory gets really hot, and until recently there was simply no tool to tell you how hot. Thanks to the latest HWiNFO version 6.42 you can now monitor the operating temperature of the GDDR6X video memory on the RTX 3080 and RTX 3090 and take appropriate measures to keep things cool, ensuring maximum performance and problem-free operation in the long run. Keep in mind that you might be quite surprised by how high the actual numbers are, but that is to be expected, considering that even the surface of the video card's backplate gets quite hot when we touch or measure it.

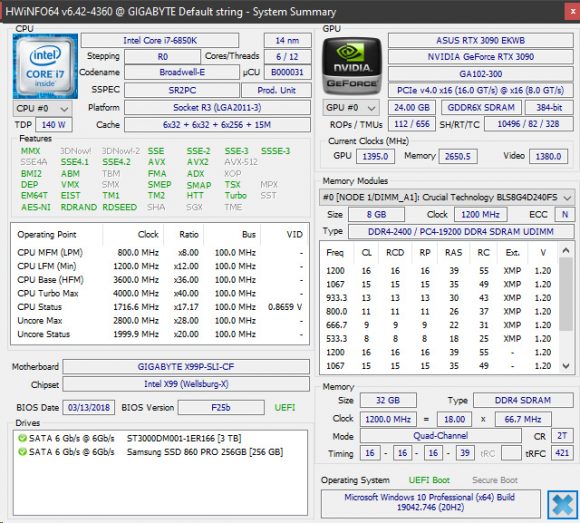

Just download and run the latest HWiNFO (the portable version works too), go to Monitoring, start Sensor status, then scroll down to the GPU data and look for the GPU Memory Junction Temperature reading, right under the GPU Temperature. On the left of the image you can see the idle video memory temperature of an ASUS EKWB GeForce RTX 3090 GPU, and on the right the video memory temperature after we run PhoenixMiner with the RTX 3090 tweaked to give us a 120 MH/s hashrate for Ethereum mining. We get 36 degrees Celsius at idle, but when mining it climbs to 92 degrees Celsius, and this is a water-cooled GPU with the GPU temperature reaching only about 50 C under load.
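If you would rather not watch the sensor window the whole time, HWiNFO can also log sensor readings to a CSV file, and a few lines of Python are enough to pull the peak value out of such a log. The snippet below is only a rough sketch: the column header and the helper name `peak_memory_temp` are our assumptions, so check the header row of your own log file. The sample data simply reuses the two readings quoted above.

```python
import csv
import io

# Assumed column header; verify it against the header row of your own log.
TEMP_COLUMN = "GPU Memory Junction Temperature [°C]"


def peak_memory_temp(csv_file, column=TEMP_COLUMN):
    """Return the highest numeric value in `column`, or None if there is none."""
    peak = None
    for row in csv.DictReader(csv_file):
        raw = (row.get(column) or "").strip()
        if not raw:
            continue  # column missing or cell empty in this row
        try:
            value = float(raw)
        except ValueError:
            continue  # skip non-numeric cells
        if peak is None or value > peak:
            peak = value
    return peak


# Illustrative sample built from the idle and mining readings in this post.
sample_log = io.StringIO(
    "Label,GPU Memory Junction Temperature [°C]\n"
    "idle,36\n"
    "mining,92\n"
)
print(peak_memory_temp(sample_log))  # 92.0
```

For a real log you would pass an open file object instead of the sample string, opened with `newline=""` and whatever text encoding your log file actually uses.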

A note regarding the new GDDR6X Memory Junction Temperature:

Just like in case of Navi, this is not the external (case) temperature, but internal junction temperature measured inside the silicon.

So don’t be scared to see higher values than other common temperatures, it’s expected. Also the limits are set respectively higher (throttling starts around 110 C).

EDIT: Adding that the value reported should be the current maximum temperature among all memory chips.
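To put the note in numbers, here is a small Python sketch of how much headroom our readings leave before the roughly 110 C point where throttling is said to start. The function names are ours, and the per-chip values are purely hypothetical, since HWiNFO only shows the single maximum value; they are there just to illustrate the "hottest chip wins" behaviour described in the EDIT.

```python
# Approximate throttling point taken from the note above ("around 110 C").
THROTTLE_START_C = 110.0


def reported_junction_temp(per_chip_temps):
    """Report the hottest chip, as the note says the sensor value should."""
    return max(per_chip_temps)


def headroom(reported_temp, limit=THROTTLE_START_C):
    """Degrees Celsius left before the approximate throttling point."""
    return limit - reported_temp


# Readings from this post: 36 C idle, 92 C while mining.
print(headroom(36.0))  # 74.0
print(headroom(92.0))  # 18.0

# Hypothetical per-chip values, for illustration only.
print(reported_junction_temp([88.0, 90.0, 92.0]))  # 92.0
```

So the 92 C we measured while mining looks alarming next to the GPU temperature, but it still sits about 18 C below the point where throttling is expected to kick in.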

Checking the backplate of the water-cooled RTX 3090 with a FLIR thermal camera showed that when mining at stock settings the hottest spot is around 72.8 C, and with overclocked memory the hottest spot on the backplate, where the memory chips are, is 75.8 C. Such high backplate readings are no wonder, considering that the actual operating temperature of the memory under the backplate is 92 degrees. So adding extra cooling fans on top of the backplate is definitely a must if you plan on using an RTX 3090 for mining Ethereum. The same goes for the RTX 3080 when used for mining, though with the RTX 3070 and 3060 Ti the situation could be better due to their lower power usage, especially when optimized for mining. We need to further explore the memory temps with these two; meanwhile, if you check what temperatures you are getting with HWiNFO on your GPUs, feel free to share your results in the comments below.

Update: Trying to read the video memory temperature on a Palit GeForce RTX 3070 GameRock GPU with HWiNFO 6.42 ended without success. It seems that the latest HWiNFO might still not be able to read the memory temperature on all GeForce RTX 30 series GPUs, so the GPU Memory Junction Temperature data might not be available for all cards! Note that the RTX 3070 is equipped with GDDR6 rather than GDDR6X memory, which may be why the reading is missing on this card.

– To download and try GDDR6X monitoring with the free HWiNFO 6.42 diagnostic software…